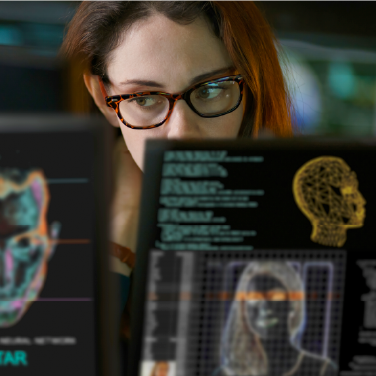

Fraud Alert: Deepfake Scams

A 2024 survey and report by McAfee Labs shows that it takes only three seconds of audio to produce a voice clone that’s an 85% match to the original.1 In the same survey, one in ten respondents said they had received a message from an artificial intelligence (AI) voice clone, and 77% of those who received one lost money as a result. Read on for three real-life deepfake scenarios and tips on how to avoid these scams.


1. Deepfake phone calls

Fraudsters use deepfake phone calls to play on the victim’s emotions and manipulate them into sending money, a common tactic in romance and grandparent scams. For instance, a woman in Alabama heard her great-grandson’s voice saying he had been in a car wreck and was being taken to jail.2 He asked her to send bail money to an attorney, but the money would actually have gone to the scammer. Luckily, she discovered the call was fake before sending any money.

2. Deepfake videos

Businesses are common targets of fraud attacks. In one case, scammers tricked an employee at a multinational firm into paying out $25 million after a video call that appeared to include the company’s chief financial officer and other staff members.3 The company discovered the scam only after the employee checked with the corporation’s head office. Scammers also use deepfake technology to impersonate government officials and request money or information, and some deepfake videos impersonate celebrities in fake promotional videos and investment scams.

3. Deepfakes that target biometric security

Biometric fraud attempts against financial institutions use deepfaked voices and faces to fool voice and facial recognition. A report by Entrust showed that deepfake selfies increased by 58% in 2025, targeting the authentication services that financial institutions use to verify customers.4 Criminals use a variety of techniques to trick these verification systems, including 2D and 3D masks, photos of screens or printouts, and videos of photos or screens. Criminal organizations also share tactics and AI technology to stay one step ahead of detection methods.

Tips to Avoid Deepfake Scams

As AI technology becomes more sophisticated, it’s harder to tell what’s real from what’s fake. To avoid falling for deepfake audio, set a code word with family and close friends to use for verification. This can be a phrase or a question with a unique answer. For instance, you might ask, “What’s my favorite color?” and the agreed answer would be an unrelated phrase, like “pineapple on pizza.”

Red flags of a deepfake scam include uncharacteristic or unexpected requests, especially those with a sense of urgency or fear. Pause before acting, and always question the source, even if it’s a text, message, or phone call that appears to be from someone you know. If you’re not sure, ask them a personal question only they would know, or contact them through another channel to verify it’s them before responding. Be careful about what information you’re sharing online and review your privacy settings on social media to limit who can see your posts.

If you think you’ve been the victim or target of a fraud attack, report the incident even if you didn’t fall for the scam. Reach out to the organization or business that the deepfake is impersonating to make them aware of the fraud attempt. If the scam occurred on a social media platform, alert the platform to the policy violation by reporting the message as “Scam—pretending to be someone else.” You can also report the scam to your local police department or to federal agencies, or file a report with the FBI’s Internet Crime Complaint Center.

Keep in mind that Texell will never contact you asking for account or personal information. If you have any questions about your Texell account, send a secure message in Digital Banking or call or text 254.773.1604.

1 Artificial Imposters—Cybercriminals Turn to AI Voice Cloning for a New Breed of Scam from mcafee.com.

3 Finance worker pays out $25 million after video call with deepfake ‘chief financial officer’ from cnn.com.

4 Deepfakes Drive 20% of Biometric Fraud Attempts from fintechmagazine.com.

If you wish to comment on this article or have an idea for a topic we should cover, we want to hear from you! Email us at editor@texell.org.